Lookup tagger (5.4.3-5.4.4)

Lookup tagger:

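    >>> import nltk
    >>> from nltk.corpus import brown
    >>> fd = nltk.FreqDist(brown.words(categories='news'))
    >>> cfd = nltk.ConditionalFreqDist(brown.tagged_words(categories='news'))
    >>> # In NLTK 3, keys() is not ordered by frequency, so use most_common()
    >>> most_freq_words = [word for (word, _) in fd.most_common(100)]
    >>> likely_tags = dict((word, cfd[word].max()) for word in most_freq_words)
    >>> baseline_tagger = nltk.UnigramTagger(model=likely_tags)
    >>> brown_tagged_sents = brown.tagged_sents(categories='news')
    >>> baseline_tagger.evaluate(brown_tagged_sents)
    0.45578495136941344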

Let's go through this in detail to understand what it does.

    >>> most_freq_words
    ['the', ',', '.', 'of', 'and', 'to', 'a', 'in', 'for', 'The', 'that', '``', 'is', 'was', "''", 'on', 'at', 'with', 'be', 'by', 'as', 'he', 'said', 'his', 'will', 'it', 'from', 'are', ';', '--', 'an', 'has', 'had', 'who', 'have', 'not', 'Mrs.', 'were', 'this', 'which', 'would', 'their', 'been', 'they', 'He', 'one', 'I', 'but', 'its', 'or', ')', 'more', 'Mr.', '(', 'up', 'all', 'last', 'out', 'two', ':', 'other', 'new', 'first', 'than', 'year', 'A', 'about', 'there', 'when', 'In', 'after', 'home', 'also', 'It', 'over', 'into', 'But', 'no', 'made', 'her', 'only', 'years', 'three', 'time', 'them', 'some', 'New', 'can', 'him', '?', 'any', 'state', 'President', 'before', 'could', 'week', 'under', 'against', 'we', 'now']

most_freq_words simply holds the 100 most frequent words in the news category. likely_tags then maps each of those 100 words to the tag it receives most often in the corpus (cfd[word].max()).

    >>> likely_tags
    {'all': 'ABN', 'over': 'IN', 'years': 'NNS', 'against': 'IN', 'its': 'PP$', 'before': 'IN', '(': '(', 'had': 'HVD', ',': ',', 'to': 'TO', 'only': 'AP', 'under': 'IN', 'has': 'HVZ', 'New': 'JJ-TL', 'them': 'PPO', 'his': 'PP$', 'Mrs.': 'NP', 'they': 'PPSS', 'not': '*', 'now': 'RB', 'him': 'PPO', 'In': 'IN', '--': '--', 'week': 'NN', 'some': 'DTI', 'are': 'BER', 'year': 'NN', 'home': 'NN', 'out': 'RP', 'said': 'VBD', 'for': 'IN', 'state': 'NN', 'new': 'JJ', ';': '.', '?': '.', 'He': 'PPS', 'be': 'BE', 'we': 'PPSS', 'after': 'IN', 'by': 'IN', 'on': 'IN', 'about': 'IN', 'last': 'AP', 'her': 'PP$', 'of': 'IN', 'could': 'MD', 'Mr.': 'NP', 'or': 'CC', 'first': 'OD', 'into': 'IN', 'one': 'CD', 'But': 'CC', 'from': 'IN', 'would': 'MD', 'there': 'EX', 'three': 'CD', 'been': 'BEN', '.': '.', 'their': 'PP$', ':': ':', 'was': 'BEDZ', 'more': 'AP', '``': '``', 'that': 'CS', 'but': 'CC', 'with': 'IN', 'than': 'IN', 'he': 'PPS', 'made': 'VBN', 'this': 'DT', 'up': 'RP', 'will': 'MD', 'can': 'MD', 'were': 'BED', 'and': 'CC', 'is': 'BEZ', 'it': 'PPS', 'an': 'AT', "''": "''", 'as': 'CS', 'at': 'IN', 'have': 'HV', 'in': 'IN', 'any': 'DTI', 'no': 'AT', ')': ')', 'when': 'WRB', 'also': 'RB', 'other': 'AP', 'which': 'WDT', 'President': 'NN-TL', 'A': 'AT', 'I': 'PPSS', 'who': 'WPS', 'two': 'CD', 'The': 'AT', 'a': 'AT', 'It': 'PPS', 'time': 'NN', 'the': 'AT'}
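To see what the FreqDist / ConditionalFreqDist pair is doing, here is a rough pure-Python equivalent using `collections.Counter`: count word frequencies, keep the N most frequent words, and map each one to the tag it carries most often. This is only a sketch; `build_likely_tags` and the toy corpus are made up for illustration, and on the real data you would pass something like `brown.tagged_words(categories='news')` instead.

```python
from collections import Counter, defaultdict


def build_likely_tags(tagged_words, n):
    """Map each of the n most frequent words to its most frequent tag.

    build_likely_tags is a hypothetical helper, not an NLTK function.
    """
    word_freq = Counter(w for (w, _) in tagged_words)   # plays the role of nltk.FreqDist
    tag_freq = defaultdict(Counter)                     # plays the role of ConditionalFreqDist
    for w, t in tagged_words:
        tag_freq[w][t] += 1
    top_words = [w for (w, _) in word_freq.most_common(n)]
    # most_common(1)[0][0] picks the top tag, like cfd[word].max()
    return {w: tag_freq[w].most_common(1)[0][0] for w in top_words}


# Invented toy data, not taken from the Brown corpus.
toy = [('the', 'AT'), ('jury', 'NN'), ('said', 'VBD'), ('the', 'AT'),
       ('said', 'VBN'), ('said', 'VBD'), ('the', 'AT')]
print(build_likely_tags(toy, 2))  # {'the': 'AT', 'said': 'VBD'}
```

'said' appears with two different tags here, and only the more frequent one (VBD) survives; that is exactly the information a lookup tagger throws away.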

Words outside the top 100 get no tag at all (None), as below.

    >>> sent = brown.sents(categories='news')[3]
    >>> baseline_tagger.tag(sent)
    [('``', '``'), ('Only', None), ('a', 'AT'), ('relative', None), ('handful', None), ('of', 'IN'), ('such', None), ('reports', None), ('was', 'BEDZ'), ('received', None), ("''", "''"), (',', ','), ('the', 'AT'), ('jury', None), ('said', 'VBD'), (',', ','), ('``', '``'), ('considering', None), ('the', 'AT'), ('widespread', None), ('interest', None), ('in', 'IN'), ('the', 'AT'), ('election', None), (',', ','), ('the', 'AT'), ('number', None), ('of', 'IN'), ('voters', None), ('and', 'CC'), ('the', 'AT'), ('size', None), ('of', 'IN'), ('this', 'DT'), ('city', None), ("''", "''"), ('.', '.')]
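These None tags also matter for the score. As far as I understand `evaluate()`, it strips the tags from the gold sentences, re-tags them, and counts how many (word, tag) pairs match, so every None is simply a miss, which goes a long way toward explaining the low score of the 100-word table. A minimal pure-Python sketch of both steps, assuming a plain dict as the model (`lookup_tag` and `token_accuracy` are hypothetical names, not NLTK functions):

```python
def lookup_tag(words, model):
    """Tag words from a fixed word->tag table; unknown words get None."""
    return [(w, model.get(w)) for w in words]


def token_accuracy(model, gold_sents):
    """Share of tokens whose looked-up tag equals the gold tag."""
    correct = total = 0
    for sent in gold_sents:
        words = [w for (w, _) in sent]           # strip the gold tags
        predicted = lookup_tag(words, model)     # re-tag the bare words
        for (_, gold), (_, guess) in zip(sent, predicted):
            correct += (gold == guess)           # a None guess never matches
            total += 1
    return correct / total if total else 0.0


# Tiny invented example, not taken from the Brown corpus.
likely_tags = {'the': 'AT', 'of': 'IN', 'said': 'VBD'}
print(lookup_tag(['the', 'jury', 'said'], likely_tags))
# [('the', 'AT'), ('jury', None), ('said', 'VBD')]
gold = [[('the', 'AT'), ('jury', 'NN'), ('said', 'VBD')],
        [('the', 'AT'), ('city', 'NN')]]
print(token_accuracy(likely_tags, gold))  # 3 of 5 tokens correct -> 0.6
```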

The backoff option adds a fallback: any word the lookup table does not cover is passed on to a default tagger, which assigns 'NN' in this case.

    >>> baseline_tagger = nltk.UnigramTagger(model=likely_tags,
    ...                                      backoff=nltk.DefaultTagger('NN'))
    >>> baseline_tagger.tag(sent)
    [('``', '``'), ('Only', 'NN'), ('a', 'AT'), ('relative', 'NN'), ('handful', 'NN'), ('of', 'IN'), ('such', 'NN'), ('reports', 'NN'), ('was', 'BEDZ'), ('received', 'NN'), ("''", "''"), (',', ','), ('the', 'AT'), ('jury', 'NN'), ('said', 'VBD'), (',', ','), ('``', '``'), ('considering', 'NN'), ('the', 'AT'), ('widespread', 'NN'), ('interest', 'NN'), ('in', 'IN'), ('the', 'AT'), ('election', 'NN'), (',', ','), ('the', 'AT'), ('number', 'NN'), ('of', 'IN'), ('voters', 'NN'), ('and', 'CC'), ('the', 'AT'), ('size', 'NN'), ('of', 'IN'), ('this', 'DT'), ('city', 'NN'), ("''", "''"), ('.', '.')]
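The control flow of backoff can be pictured as a chain: ask the first tagger, and only if it returns None, ask the next one. A minimal sketch, assuming each tagger is just a function from one word to a tag or None; NLTK's real taggers work on whole sentences and are wired together via the `backoff=` argument, so `tag_with_backoff` is a simplified, hypothetical stand-in.

```python
def tag_with_backoff(words, taggers):
    """Tag each word with the first tagger in the chain that returns a tag."""
    result = []
    for w in words:
        tag = None
        for tagger in taggers:
            tag = tagger(w)
            if tag is not None:      # this tagger knows the word: stop here
                break
        result.append((w, tag))      # stays None if no tagger knew the word
    return result


def default_nn(word):
    return 'NN'  # like nltk.DefaultTagger('NN'): same tag for every word


likely_tags = {'the': 'AT', 'said': 'VBD'}   # invented excerpt of the table
lookup = likely_tags.get                     # returns None for unknown words
print(tag_with_backoff(['the', 'jury', 'said'], [lookup, default_nn]))
# [('the', 'AT'), ('jury', 'NN'), ('said', 'VBD')]
```

Putting the default tagger last guarantees that no word is left with None.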

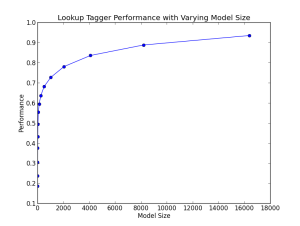

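The next experiment shows how large the model (the number of words in the lookup table) reasonably needs to be. It evaluates the lookup tagger, with 'NN' backoff, using the 1, 2, 4, ..., 16384 most frequent words.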

    >>> import nltk
    >>> from nltk.corpus import brown
    >>> import matplotlib.pyplot as plt
    >>> def performance(cfd, wordlist):
    ...     # lookup table: each word in wordlist -> its most frequent tag
    ...     lt = dict((word, cfd[word].max()) for word in wordlist)
    ...     baseline_tagger = nltk.UnigramTagger(model=lt,
    ...                                          backoff=nltk.DefaultTagger('NN'))
    ...     return baseline_tagger.evaluate(brown.tagged_sents(categories='news'))
    ...
    >>> def display():
    ...     # most_common() returns (word, count) pairs sorted by frequency
    ...     word_freqs = nltk.FreqDist(brown.words(categories='news')).most_common()
    ...     words_by_freq = [w for (w, _) in word_freqs]
    ...     cfd = nltk.ConditionalFreqDist(brown.tagged_words(categories='news'))
    ...     sizes = [2 ** i for i in range(15)]  # 1, 2, 4, ..., 16384 words
    ...     perfs = [performance(cfd, words_by_freq[:size]) for size in sizes]
    ...     plt.plot(sizes, perfs, '-bo')
    ...     plt.title('Lookup Tagger Performance with Varying Model Size')
    ...     plt.xlabel('Model Size')
    ...     plt.ylabel('Performance')
    ...     plt.show()
    ...
    >>> display()

With the top 100 words and no backoff, the evaluation score was about 0.456. While the model is small (say, fewer than about 2,000 words), performance improves sharply as the model grows; after that, the gains slow down and the curve flattens out.
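The shape of that curve can be reproduced on a tiny scale. Word frequencies are very skewed, so the first few table entries cover most tokens and every later entry covers fewer. A minimal sketch on an invented Zipf-like toy corpus (not Brown data), scoring on the same tokens the table was built from, as `display()` does; `lookup_accuracy` is a hypothetical helper, not an NLTK function.

```python
from collections import Counter, defaultdict


def lookup_accuracy(tagged_words, size, default='NN'):
    """Accuracy of a top-`size` lookup tagger with a default-tag backoff,
    evaluated on the same tokens it was built from."""
    word_freq = Counter(w for (w, _) in tagged_words)
    tag_freq = defaultdict(Counter)
    for w, t in tagged_words:
        tag_freq[w][t] += 1
    model = {w: tag_freq[w].most_common(1)[0][0]
             for (w, _) in word_freq.most_common(size)}
    # words missing from the table fall back to the default tag
    correct = sum(model.get(w, default) == t for (w, t) in tagged_words)
    return correct / len(tagged_words)


# Invented toy corpus: a few very frequent words, a long tail of rare ones.
toy = ([('the', 'AT')] * 40 + [('of', 'IN')] * 20 + [('said', 'VBD')] * 10
       + [('is', 'BEZ')] * 5 + [('ran', 'VBD')] * 2 + [('big', 'JJ')] * 1
       + [('city', 'NN')] * 1)
for size in [1, 2, 4, 8]:
    print(size, round(lookup_accuracy(toy, size), 3))
# 1 0.519
# 2 0.772
# 4 0.962
# 8 1.0
```

Doubling the table from 1 to 2 words gains about 0.25, while doubling from 4 to 8 gains less than 0.04: the same diminishing returns as in the plot, just compressed.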

These results suggest that the model does not need to be very large: beyond a certain size, adding more words to the lookup table buys little extra accuracy.