What is Tokenization?

Now that my NLTK learning environment seems ready, let's move forward.

I set up one variable (para) to hold three sentences.

>>> import nltk
>>> para = "Hello World. It's good to see you. Thansk for buying this book."
>>> para
"Hello World. It's good to see you. Thansk for buying this book."

Next, let's split it into sentences.

>>> from nltk.tokenize import sent_tokenize
>>> sent_tokenize(para)
['Hello World.', "It's good to see you.", 'Thansk for buying this book.']
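To get a feel for what sentence splitting involves, here is a deliberately naive sketch in plain Python (naive_sent_split is my own hypothetical helper, not part of NLTK). It simply splits after ".", "!" or "?" when whitespace follows. It returns the same three sentences for para, but it also wrongly breaks "Mr. Smith ..." after "Mr.", which is the kind of abbreviation the pre-trained Punkt model behind sent_tokenize is designed to handle.

```python
import re

def naive_sent_split(text):
    """Hypothetical helper: split after '.', '!' or '?' when whitespace follows."""
    # The lookbehind keeps the punctuation attached to its sentence;
    # \s+ consumes the whitespace between sentences.
    return [s for s in re.split(r'(?<=[.!?])\s+', text.strip()) if s]

para = "Hello World. It's good to see you. Thansk for buying this book."
print(naive_sent_split(para))
# ['Hello World.', "It's good to see you.", 'Thansk for buying this book.']

# An abbreviation fools the naive rule:
print(naive_sent_split("Mr. Smith bought this book."))
# ['Mr.', 'Smith bought this book.']
```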

sent_tokenize appears to be a function that splits a text into sentences. Of course, you can also load the underlying tokenizer object yourself and get the same result, as follows.

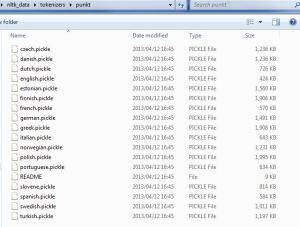

>>> tokenizer = nltk.data.load('tokenizers/punkt/english.pickle')
>>> tokenizer.tokenize(para)
['Hello World.', "It's good to see you.", 'Thansk for buying this book.']

Judging from this, sent_tokenize is essentially a shortcut that loads english.pickle and uses it to tokenize the given text (sent_tokenize also accepts a language argument, which defaults to 'english'). The same folder contains model files for various other languages.

However, there are no East Asian languages such as Japanese or Chinese there. I suspect this is because East Asian languages, including my own, do not put spaces between words.

There should be a workaround, and I believe I will learn it in the future.
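As a rough workaround sketch for sentence splitting only: Japanese and Chinese usually end sentences with full-width marks such as 。, ！ and ？, so a regular expression can find sentence boundaries without relying on spaces between words. split_cjk_sentences is a hypothetical helper of my own, not an NLTK function, and it assumes those marks are always used. Splitting the resulting sentences into words is a separate and harder problem.

```python
import re

# Sentence terminators assumed here: full-width 。！？ plus ASCII ! and ?
TERMINATORS = '。！？!?'

# Either a run of non-terminators followed by one or more terminators,
# or a trailing run that has no terminator at all.
_SENTENCE = re.compile(rf'[^{TERMINATORS}]+[{TERMINATORS}]+|[^{TERMINATORS}]+$')

def split_cjk_sentences(text):
    """Hypothetical helper: rough sentence splitter for Japanese/Chinese text."""
    return [m.group().strip() for m in _SENTENCE.finditer(text) if m.group().strip()]

text = "こんにちは世界。お会いできてうれしいです。この本を買ってくれてありがとう！"
print(split_cjk_sentences(text))
# ['こんにちは世界。', 'お会いできてうれしいです。', 'この本を買ってくれてありがとう！']
```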

nltk.tokenize contains several other tools. First, I tried word_tokenize, which should split text into individual words.

>>> from nltk.tokenize import word_tokenize
>>> word_tokenize('Hello World.')
['Hello', 'World', '.']
>>> word_tokenize(para)
['Hello', 'World.', 'It', "'s", 'good', 'to', 'see', 'you.', 'Thansk', 'for', 'buying', 'this', 'book', '.']

I noticed that the period (.) was not separated from some words, for example "World." and "you.". On the other hand, the word and the period were separated in the last sentence ("book" and ".").

Is the whole text being treated as one sentence? I also tried putting two spaces between the sentences, but the results were the same.
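That guess can be checked with a small sketch. As far as I know, the word tokenizer here follows Penn Treebank-style rules, which split off only the period at the very end of the input, so when a whole paragraph is passed in, only the last period becomes its own token. The sketch uses NLTK's TreebankWordTokenizer directly and hard-codes the sentence list that sent_tokenize returned earlier, so it runs without downloading the Punkt model. Tokenizing sentence by sentence separates every period. (In NLTK 3.x, as far as I know, word_tokenize itself runs sent_tokenize first unless preserve_line=True is passed, so a newer version may already give "World" and "." separately.)

```python
from nltk.tokenize import TreebankWordTokenizer

para = "Hello World. It's good to see you. Thansk for buying this book."

# The result of sent_tokenize(para) shown earlier, hard-coded so this sketch
# does not need the Punkt model files.
sentences = ['Hello World.', "It's good to see you.", 'Thansk for buying this book.']

treebank = TreebankWordTokenizer()

# Whole paragraph at once: only the period at the end of the string is split off.
whole = treebank.tokenize(para)

# Sentence by sentence: each sentence-final period becomes its own token.
per_sentence = [tok for sent in sentences for tok in treebank.tokenize(sent)]

print(whole)
print(per_sentence)
```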

(to be continued...)